This post is a transcript of my opening remarks at a Great Debate held earlier today at the European Geosciences Union 2019 meeting in Vienna. The debate asked us to consider the question: What value should we place on contributions that cannot be easily measured?

Update (13/04/2019): A video of the whole debate is now available online. My opening remarks start at 20:43 but if you have time I would recommend listening to the whole session.

As scientists, measurement is what we do. It is how we have built our disciplines and won the admiration and respect of our peers and the public for the many wondrous ways in which we have illuminated the world.

It is only natural therefore that we would seek to turn our rulers and compasses on ourselves.

But while numbers have done so much to help us understand the natural world, they are far more difficult to apply to the more complex world of human affairs, science included.

So we are here today to debate this question because of the stresses and strains that an over-enthusiastic and ill-considered application of numbers – or metrics – in research evaluation has brought to the academy.

Now… when trying to figure my way out of a problematic situation, I like to think about death. And I would encourage you all to do the same.

This quotation is number 1 of the top five regrets of people who have reached the end of their lives (according to a book by former nurse Bronnie Ware). I suspect it resonates, perhaps a little uncomfortably, with many of us in this room.

The difficulty we face every day is how to be true to ourselves. How do we cling to our ideals and highest aspirations in a world that seems daily to distract us from them? This problem is perhaps particularly acute for academics because we need to forge a reputation for ourselves if we are to succeed in our careers.

And the problem with that, according to Thomas Paine, is that a reputation is what others think of us – how they evaluate us. It does not seem to be enough to be true to oneself. We have to convince others of our scientific worth.

And the problem with that is that our system of research evaluation as a whole seems to have made us prisoners of numbers.

We have been captured by citation counts, JIFs, and h-indices. And while these indicators are not entirely devoid of information that might be of some utility in research evaluation, they have taken over to an extent that is dangerous to the health of scientists and to the health of science.

In brief we can list the problems with metrics:

- reduced productivity (as people chase JIFs in rounds of submission and resubmission)

- displacement of attention from other important academic activities

- focus on the individual that undervalues the role of teams

- positive bias in the literature – the reproducibility (or reliability) crisis

- focus on academic rather than real world impacts

- hyper-competition that preserves the status quo (what chance diversity?)

As Rutger Bregman writes: “Governing by numbers is the last resort of a country that no longer knows what it wants, a country with no vision of utopia.” We might say the same of academia.

Now it’s easy to be critical of the misuse of metrics… it’s much harder to come up with solutions.

Bregman’s prescription for change is radical. He exhorts us to be “unrealistic, unreasonable, and impossible”.

And I completely agree. But I also think that to change the world we have to be realistic, reasonable and think about what’s possible.

(What can I tell you? I’m a mess of contradictions.)

So it’s easy to be a critic. It’s harder to think through the problem of evaluation creatively and constructively. But that’s precisely what we need to do if we are to take a properly holistic approach.

There are already plenty of bright minds thinking about this.

The UMC Utrecht is one of a number of institutions that has reformed its hiring and promotion procedures to create practical space for qualitative elements in assessments. Applicants have to write a short structured essay addressing their contributions on 5 fronts:

- research

- teaching and mentorship

- departmental citizenship

- clinical practice

- entrepreneurship and public engagement

This gives reviewers information in a structured, consistent and concise form that embraces quantitative and qualitative aspects of academic achievement. It is an honest and practical embrace of the principle that ‘science quality is hard to define and harder to measure’.

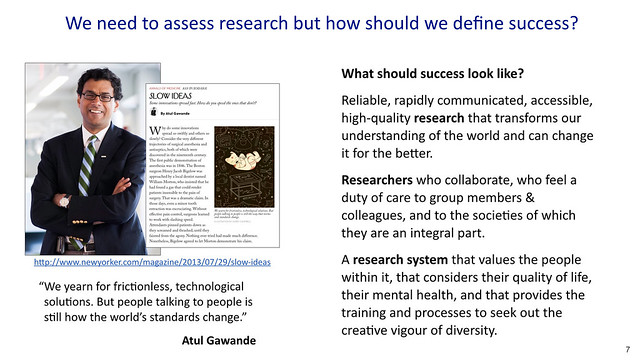

We are never going to escape the problem of research assessment – a world in which resources are finite demands it of us (to say nothing of public accountability). But while we will always be tempted by metrics – by the simplifying power that they seem to offer – we have to resist this. As Gawande writes: “We yearn for frictionless, technological solutions. But people talking to people is still how the world’s standards change.”

So I am glad that we are talking about this today. It is only through discussion – in good faith – of what really matters to us, through negotiation and through the recognition of the importance of good judgement that we will come to a better definition of what success in science looks like.

I look forward to continuing that discussion in the Q&A.

Hi Steven,

Interesting and really thought-provoking. I think the appealing thing about metrics is that comparing one number to another suggests that ambiguity has been taken out of the equation. (4 papers is certainly more than 3!) The IF and such came in because of arguments that just counting sheer numbers hides a lot of other factors that should be considered. But, of course, we can’t boil things down to objective numbers because there is still subjectivity in all of our evaluations, given the nature of the things we are trying to evaluate. How does one compare faculty candidates from different areas of research without subjectivity? Though of course the need to do this can lead to a lack of diversity – in the absence of hard numbers, it is much easier to evaluate things you are familiar with than those that you are not.

I’d be interested to see if you can gain any traction on changing attitudes locally on this (within your department or within IC generally). The trend over the last few years has been, if anything, to increase the number of metrics used, to include items beyond research metrics. At least we are looking beyond papers and grant income, but we are still trying to put hard numbers on everything.

As you know, I’m an applied researcher and I was formerly in a science department. In the last few years before I left, the conversation at least shifted from “When are you going to have a Nature paper?” to “When is industry going to adopt your discoveries?”. It shows something of a naive view of the nature of applied research and its timescales, but it at least shows some attempt to compare researchers in different disciplines by different criteria.

Thanks for the comment, Karen. Two things: (i) one has to be careful to take account of the subjectivity (and bias) that is hidden within numbers; they might look ‘objective’ but they are not. Each citation is the result of a subjective choice by an individual, and patterns of citation are biased by considerations of gender that need to be taken into account. (ii) I think we should face up to the fact that evaluations of the various and complex activities of an academic life can only be done by subjective judgement; yes, comparing across disciplines is hard, but it can be facilitated by inclusion of the requisite expert input, either from reviewers/referees or panel members.

I’m glad you are detecting some signs of movement at Imperial. That’s good to see but I don’t think we have yet rid ourselves of all our old, bad habits. We’re working on it though!