The new and improved Times Higher Education (THE) Impact Rankings 2020 were published this week with as much online fanfare as THE could muster. Unfortunately, they are not improved enough.

The Impact Rankings score participating universities on how well their activities contribute to the UN Sustainable Development Goals (SDGs), which range across issues such as poverty, gender equality, climate action, health and well-being, peace and justice. Although the compilation of the rankings is primarily motivated as a way to celebrate the real-world impact of what many universities do, a noble aspiration that I applaud, the core methodology remains unfit for purpose. At its centre, as with almost all rankings, there is an intellectual hollowness that undermines the whole project, and it is disappointing to see that the THE has yet to take responsibility for their methodological shortcomings. It is even more disappointing to see some universities abandon critical thinking in their rush to embrace the results.

In an article published just before the Impact Rankings were announced, Duncan Ivison, the Deputy Vice-Chancellor for Research at the University of Sydney, welcomed the increased focus on university activities that advance the SDGs. He notes that:

“there is now a remarkable global consensus on the importance of the 17 domains identified by the SDGs and the challenges we face in ensuring the well-being of our people and our planet. The framework provides a way for governments, industry, civil society and universities to consider how they can contribute to addressing these global challenges.”

I agree with Ivison that the attention brought to these important components of university missions is a useful contribution to a much wider debate within society about what governments, and the publics they represent, should expect of their institutions of higher learning.

Where I part company with Ivison is in the tenor of his caveats about the ranking process. His notes of caution are too lukewarm. He warns that too narrow a focus on impact (a common, though not entirely unreasonable, preoccupation of governments) risks undermining investment in curiosity-driven research that can have major but unanticipated impacts, and closes by conceding: “There are limits to what universities can do and the SDGs don’t capture everything about the impact of our research.”

They sure don’t. But the problems run deeper. When the 2019 Impact Rankings were published, I wrote a detailed critique that I think stands the test of time, so I won’t repeat the argument in detail. In essence, I identified three major problems of arbitrariness and incompleteness within the THE’s ranking methodology:

- Six of the seventeen SDGs were not included.

- The rankings are based on an overall score made up of four components: the score for SDG17 (‘Partnerships for the goals’ – a measure of collaboration and promotion of best practice in work towards SDGs) and the three highest scores that the university is awarded for any other SDG. The pragmatism in this approach is obvious, but it means that the overall scores are incommensurable – the THE is not comparing like with like.

- The score for each SDG is made up of an arbitrarily weighted tally of very different activity indicators (e.g. research, student numbers, policy development) that, as well as providing only approximate and incomplete evaluations of a rich spectrum of endeavour, are – again – incommensurable. I dissected the problematic nature of these tallies for SDG3 (Good health and well-being) and SDG12 (Responsible consumption and production) last year.
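
To make the second problem concrete, here is a minimal Python sketch of an overall score built from SDG17 plus a university's three best other SDGs. The equal weights, the function name `overall_score`, and both sets of scores are invented for illustration; they are assumptions, not the THE's actual figures or code.

```python
# Hypothetical weights: the article does not state them, so these are placeholders.
SDG17_WEIGHT = 0.25
OTHER_WEIGHT = 0.25  # applied to each of the three best other SDGs


def overall_score(sdg_scores):
    """Combine the SDG17 score with the three highest-scoring other SDGs.

    Returns the weighted total and the list of SDGs that contributed
    alongside SDG17, so the basis of the score stays visible.
    """
    sdg17 = sdg_scores[17]
    others = {sdg: score for sdg, score in sdg_scores.items() if sdg != 17}
    top_three = sorted(others, key=others.get, reverse=True)[:3]
    total = SDG17_WEIGHT * sdg17 + sum(OTHER_WEIGHT * others[sdg] for sdg in top_three)
    return total, sorted(top_three)


# Invented scores for two made-up universities (keys are SDG numbers).
university_a = {17: 80.0, 3: 90.0, 4: 85.0, 5: 70.0, 13: 40.0}
university_b = {17: 80.0, 12: 90.0, 13: 85.0, 14: 70.0, 3: 40.0}

score_a, basis_a = overall_score(university_a)
score_b, basis_b = overall_score(university_b)

print(score_a, basis_a)
print(score_b, basis_b)
```

With these made-up numbers both universities score 81.25, yet apart from SDG17 the two totals draw on entirely different goals (3, 4 and 5 versus 12, 13 and 14). Placing them side by side in one table is not comparing like with like.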

Only one of these issues has been addressed in the latest ranking. All 17 SDGs are now included, but the largest flaws in the process are untouched. As a result, the THE clings to a methodology that takes insufficient account of the uncertainties introduced by the proxy nature of the indicators used to ‘measure’ actual performance, and yet still claims to be able to distinguish universities on scores that differ by 0.1%. It is laughable to claim this level of precision. It is to universities’ discredit that they go along.

But there is an even more serious problem. Not one particle of the work of universities towards the SDGs, trumpeted so much by the Times Higher, counts towards their score in the THE's World University Rankings, which the THE considers to be their ‘flagship analysis, […] the definitive list of the top universities globally’. These are the rankings that increasingly drive institutional behaviour – and competition between institutions. Each year’s announcement of the THE’s World Rankings is festooned with stories about this or that university rising or falling, winning or losing in the race to the top. That is the precise opposite of the collaboration that Ivison, waxing lyrical about the Impact Rankings, points to as necessary for humanity to face our global challenges. When push comes to shove for the rankings that matter, the THE assigns impact a weight of precisely zero.

Cynically, one might suppose that part of the rationale for creating their impact rankings is to divert attention from the growing chorus of criticism of university rankings. That cynicism draws strength from the continued lack of response or action from rankers to valid criticisms of their methods. As I wrote last year, “Rankers need to embrace the full complexity and diversity of what universities do, while at the same time being more open about the uncertainties in the measurements and the incompleteness of their analyses.”

But I am not given to cynicism. I still believe that, at heart, many of the people involved in rankings work want the best for our universities and our world. The THE deserves credit at least for expanding the range of university activities that are publicly rated and they are by no means the only ranker that needs to engage with the sector with a great deal more rigour. But their present methods are still unsustainable, and my offer to work together to improve them stands.

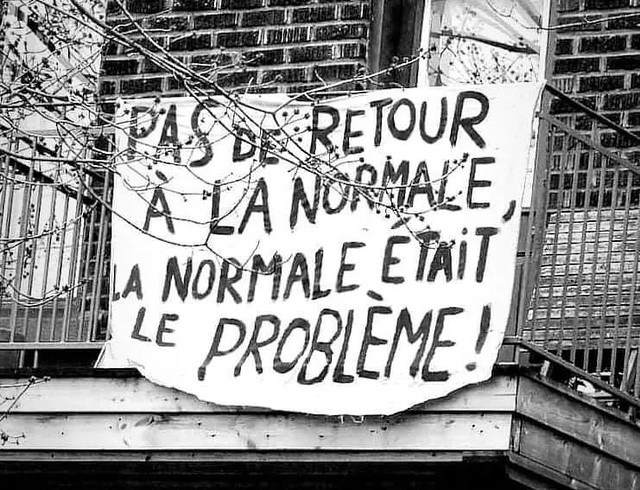

And indeed, the moment is opportune. Stranded and stalled as we all are by the COVID-19 lockdown, we have a chance to reflect and rethink. The task before us aligns with broader economic and societal concerns that have been brought painfully into focus by our present predicament. Leading economists such as Paul Johnson and Mariana Mazzucato, and even the Financial Times, are calling for fundamental changes to the workings of capital and the social contract. Their calls echo the longer-standing appeals of philosopher Michael Sandel and entrepreneur and writer Margaret Heffernan to recognise that our obsession with numbers, with performance, with efficiency, with the bottom line, and with ranking is obscuring the thing that really matters – the quality of people’s lives.