Last month I attended the IAML UK & Ireland Annual Study Weekend (ASW). IAML is the International Association of Music Libraries, and this is an event run each year by the UK & Ireland branch.

This was the first time I have attended a music library conference. I’m an old hand at libraries generally but a novice in terms of music libraries, so I had a curious mix of feelings. I felt a certain confidence but then kept remembering I have no experience in music librarianship. I know little of its history and I lack experience of what works, what’s been tried before, what all the factors are that influence how systems are set up as they are.

Leeds

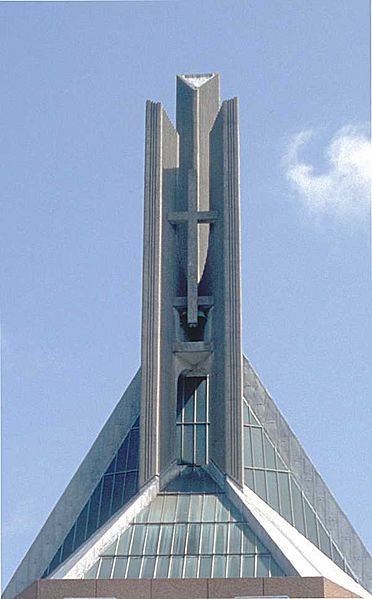

This year the ASW was held in Leeds. On the morning before the conference started I spent some time exploring the city and its 19th century glories – the huge covered market, the opulent shopping arcades, the town hall. Leeds is an impressive city.

The ASW organisers had arranged some library tours for delegates and I enjoyed seeing and learning about the history of the Leeds Library – this is a private library that was founded in 1768 with Joseph Priestley as it first Secretary. The tour gave insights into the social history of the city and the reading habits of its citizens. If you’re ever in Leeds I recommend a visit to this library – they have regular tours, or you can just visit as a guest at certain times of day.

The first talk of the ASW was about a book recently published on popular music in Leeds. It was given by three of the co-editors: Brett Lashua, Paul Thompson and Kitty Ross. The book, Popular Music in Leeds, brought together the perspectives of historians, community historians, sociologists, journalists and musicians and that mixture is reflected in its subtitle – “Histories, Heritage, People and Places”.

The three speakers described the book and the way it came about. In 2020 Leeds Museums & Galleries created an exhibition (curated by Kitty Ross) called ‘Sounds of our City’ to celebrate music in Leeds. This opened just before the COVID lockdown, so it was quickly turned into an online exhibition. It focused on places in Leeds where music of all sorts was made. By various twists and turns the exhibition inspired the book. An app is also under development which will map Leeds popular music venues and history, as well as images.

Leeds is home to the world-renowned triennial Leeds International Piano Competition (LIPC) and the 2024 event is already under way. In a wide-ranging presentation given by key staff of the LIPC we learnt about its history and the achievement of its founder, Fanny Waterman, in creating LIPC, and how efforts are now being made to address the gender gap.

Another session which focused on Leeds and its cultural heritage was the after-dinner session of archive and special collection ‘speed dating’. We moved around ten tables, each with a librarian and an item from their collection. They had three minutes to explain what the item was and what it signified. At the end we each voted for our favourite item, and then the winner was declared. This was a great session and left me wanting to know more about all the items. I am planning a separate blogpost about this.

Music librarianship

One of my aims in attending the IAML ASW was to learn more about the community of music libraries/librarians in the UK. The conference was a nice size – about 35 attendees – so it was easy to interact with most of the people there, and find out about their work. I also gained insights into a number of other interesting and/or inspiring tales from the broader music library world.

Three of the talks at the ASW gave insights into the work of music librarians. Peter Linnett described a raft of EDI initiatives at the Royal College of Music library. Sarah Lewis told us about her experience of moving into music librarianship, as Subject Librarian for the Creative Arts at University of Lincoln. She is developing a Libguide for the music dissertation module – it looks very good and thorough, focusing on the needs of the learner rather than on the resources. Charity Dove gave a very personal account of her 17 years working as subject librarian for music at Cardiff University. She didn’t shy away from describing some very challenging times. Her intense connection with and dedication to her user community shone through strongly.

It’s always good to hear about successful innovations and Hannah McCooke’s account of musical instrument lending in six Edinburgh public library branches was very inspiring. It’s also a reminder that not all music librarianship happens in places that are called music libraries. The Edinburgh scheme started in August 2022 and is a collaboration with the Tinderbox Collective – a collective of young people, musicians, artists and youth workers in Scotland. The scheme has already accumulated more than 300 instruments and in 2023 recorded over 900 loans. They have a musician-in-residence who offers tuition one day a week and puts on workshops. The scheme has reached hundreds of children, and adults too. They have a heap of testimonials and events under their belt. The scheme has spread beyond Edinburgh and I expect it will grow further.

The session of most direct interest to me was the one about the lending of vocal and orchestral sets in the UK, as I am volunteering in a library that lends sets to choirs and orchestras. Lee Noon, from the Leeds performing arts library, outlined the complexity of current provision and the pressures that choral and orchestral set collections face. Someone observed that provision of sets of scores is a national service that is run at a local or regional level. This makes it harder to provide a national strategy and achieve economies of scale.

How can we move to a more unified system of set lending? The Encore21 catalogue is a key piece of infrastructure, supported by IAML UK&Irl, but it needs to be made sustainable. It uses Koha technology which works well and is flexible, but Encore21 could be improved by adding a lending system to the catalogue. This would allow users to move easily from locating a set to effecting a loan. While desirable, this would be a big undertaking and would take some work to get agreement from all current Encore21 participants. Even agreeing a common pricing system could be very tricky. Someone suggested that the system should also cover wind band and brass band music, and should try to bring in more providers.

I wondered whether something like the UK Research Reserve would be helpful for music, to help manage holdings of rarely-requested music sets. Exploring that possibility would be another major project.

It was noted that a survey of current providers of music sets will be launched soon, and this will be useful alongside the results of the Encore21 user survey. I see that the Music Libraries Trust also ran a survey in 2020 and produced a report in 2022 that might guide thinking.

Ethics, diversity, archives

The session on cataloguing ethics was instructive and generated a lively discussion. It was good to hear research perspectives from Deborah Lee, a lecturer at UCL’s Department of Library & Information Studies with expertise in music knowledge organisation, and from Diane Rasmussen McAdie, Professor of Social Informatics at Edinburgh Napier University. Diane was a member of the Cataloging Ethics Steering Committee which drew up the Cataloguing Code of Ethics in 2021. Caroline Shaw (British Library) gave two very practical examples. In one project context notes were added to 200 catalogue records to flag up offensive language in song titles. In another case, pushing for inclusive language led to a change in an institution’s style guide.

Another talk, by Loukia Drosopolou, told us about an 18 month-long project to catalogue the archives of some women musicians – Harriet Cohen, Astra Desmond and Phyllis Tate. This is valuable work to increase representation and make resources available to music historians.

History was also the focus of Geoff Thomason’s talk about the friendship between Adolph Brodsky and Ferruccio Busoni. They got to know each other when they were both in Leipzig and kept up links when Brodsky moved to Manchester as a professor at the Royal Manchester College of Music (now the RNCM). Brodsky suggested to Busoni that he could move to Manchester to become professor of piano, but he declined. This detailed talk showed evidence of many hours spent researching in the RNCM archives to unearth the history between the two musicians.

IAML

Several sessions provided updates about IAML and the IAML UK&Irl branch, how they work, and what they do. There is a need to broaden membership to include people outside of libraries – someone said ‘Music is everywhere’ not just in libraries, so it would be good to reach out to other places where there are music collections. I think it would also be good to include people from the music publishing business, and from the digital music sector. I think that the inclusion of multiple points of view in the group can only be a good thing.

Janet Di Franco, the IAML UK&Irl branch president, gave us a good impression of the challenges ahead, and the need for us to get involved in the work of the group.

Overall I found it an interesting and engaging small conference and hope I will be able to attend another IAML ASW in the future.