Amongst the big news last week (besides the octopus-squid battle, a dress, and a singer falling over whilst – presumably – sober) was the release of an editorial from the journal “Basic and Applied Social Psychology” (BASP) announcing that it was banning p-values. There was much rejoicing by people who didn’t read the fine print. Not only did the journal ban p-values, it also banned confidence intervals and said it wasn’t particularly keen on Bayesian methods either. In other words, they pretty much banned any statistical analysis besides calculating means and drawing a few plots.

This led to some mild Twitter outrage amongst the cognoscenti of statistical ecology:

Basic and Applied Social Psychology bans both p-values *and* confidence intervals. http://t.co/RkOsChVXpT HT @FidlerF #NoMoreStats

— Michael McCarthy (@mickresearch) February 24, 2015

@bolkerb you can’t directly observe uncertainty, it shouldn’t be reported. @mickresearch @FidlerF

— Dave Harris (@davidjayharris) February 24, 2015

@bolkerb @mickresearch @FidlerF the bane of my life is non-statisticians telling me they know more about f*cking stats than I do.

— Mark Brewer (@BulbousSquidge) February 24, 2015

The editors who wrote the editorial do have good intentions, but they just don’t understand the issues.

They start with a firm statement:

From now on, BASP is banning the NHSTP [null hypothesis significance testing procedure].

Which may be going a bit far, but is also a defensible position. After all, p-values are evil, and this is one way of striking a blow for the Forces of Light. The problems come with what the editors do next:

Confidence intervals suffer from an inverse inference problem that is not very different from that suffered by the NHSTP. … Therefore, confidence intervals also are banned from BASP.

Yup. We can’t give estimates of uncertainty. The argument I ellipsed out above reveals their muddled thinking:

Regarding confidence intervals, the problem is that, for example, a 95% confidence interval does not indicate that the parameter of interest has a 95% probability of being within the interval. Rather, it means merely that if an infinite number of samples were taken and confidence intervals computed, 95% of the confidence intervals would capture the population parameter. Analogous to how the NHSTP fails to provide the probability of the null hypothesis, which is needed to provide a strong case for rejecting it, confidence intervals do not provide a strong case for concluding that the population parameter of interest is likely to be within the stated interval.

This shows that their problems are not with significance tests themselves, but with the frequentist approach to statistics. In the world outside of basic and applied social psychology, a confidence interval does “provide a strong case for concluding that the population parameter of interest is likely to be within the stated interval” if you do the philosophical leg-work.

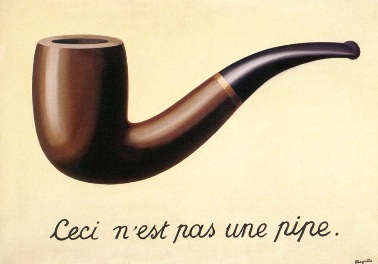

The key idea which makes frequentist statistics work is the idea that there is a parameter we are interested, say the average IQ of editors of psychology journals. We don’t know what the real value of this is, so we estimate it. The estimate is called the estimator (and what we are estimating is the estimand). At this point the frequentist can show their art knowledge:

“MagrittePipe” by Image taken from a University of Alabama site, “Approaches to Modernism”: [1]. Licensed under Fair use via Wikipedia.

The estimate is not the estimand, but hopefully it is a good guide to it, and one can make probability statements about the parameter (such as giving a probability that it is less than a certain value), at the cost of the probability actually being a probability of the data. In the frequentist interpretation, what we see is the data, which is random, so we can only make statements about that. Statistics, such as parameter estimates, are functions of the data, so statements about the variability of estimators have to be statements about the variability of the data.
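To make that concrete, here is a minimal Python sketch (mine, not the editors’) that simulates the “infinite number of samples” from the editorial. It assumes a hypothetical population with mean 100 and a known standard deviation of 15, draws many samples of size 20, builds the textbook known-sigma 95% interval around each sample mean, and counts how often the interval captures the true mean. The 95% is a property of the procedure across repeated data sets, which is why the probability statement is about the data.

```python
import math
import random
import statistics

def coverage_of_95_ci(true_mean=100.0, sigma=15.0, n=20, n_reps=10_000, seed=1):
    """Share of simulated 95% intervals (known-sigma z-interval) that contain true_mean.

    true_mean, sigma and n are hypothetical values chosen for illustration."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    half_width = 1.96 * sigma / math.sqrt(n)  # same width every time because sigma is known
    hits = 0
    for _ in range(n_reps):
        # A fresh data set from the same population: this is the randomness
        # that the frequentist probability refers to.
        sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
        xbar = statistics.fmean(sample)  # the estimate from this particular data set
        if xbar - half_width <= true_mean <= xbar + half_width:
            hits += 1
    return hits / n_reps

print(coverage_of_95_ci())  # close to 0.95
```

Any single one of those intervals either contains 100 or it doesn’t; the 95% describes how often the procedure works.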

If you accept the frequentist interpretation, then you have to accept that all your summaries are statements about the data, not the parameters. As most analyses are frequentist, the editors are effectively ditching most analyses. And it gets worse, as we’ll see.

The editors then come close to throwing the main alternative under the bus too:

Bayesian procedures are more interesting. The usual problem with Bayesian procedures is that they depend on some sort of Laplacian assumption to generate numbers where none exist. The Laplacian assumption is that when in a state of ignorance, the researcher should assign an equal probability to each possibility. … [W]ith respect to Bayesian procedures, we reserve the right to make case-by-case judgments, and thus Bayesian procedures are neither required nor banned from BASP.

The Laplacian assumption? No, Bayesian methods don’t depend on it. Indeed, any good Bayesian knows that they can’t depend on it, because there is often no unique way of assigning equal probability. For example, with a variance one could assign a flat prior to the variance, the standard deviation, the precision (1/variance), or the log of the variance, and a prior that is flat on one of these scales is not flat on the others. In reality, Bayesian methods rely on the assumption that a prior distribution represents the knowledge of the parameter(s) before the data are seen. It’s not clear what would happen if someone submitted a paper with informative priors, as the editors seem to think that this isn’t possible.
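A quick way to see why “equal probability” isn’t unique: the Python sketch below (with a made-up range of 0 to 10 for the standard deviation) puts a flat prior on the standard deviation and checks what that implies for the variance. If ignorance were instead expressed as a flat prior on the variance over the same range (0 to 100), the probability that the variance is below 50 would be 0.5; flat on the standard deviation, it is sqrt(50)/10, about 0.71. Same “ignorance”, different answers.

```python
import math
import random

def prob_variance_below(threshold=50.0, max_sd=10.0, n_draws=100_000, seed=2):
    """Monte Carlo estimate of P(variance < threshold) when the standard
    deviation gets a flat prior on (0, max_sd). Values are hypothetical."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_draws):
        sd = rng.uniform(0.0, max_sd)  # "ignorance" expressed on the SD scale
        if sd ** 2 < threshold:        # the prior this induces on the variance
            hits += 1
    return hits / n_draws

# Flat on the variance over (0, 100): P(variance < 50) = 50 / 100.
flat_on_variance = 50.0 / 10.0 ** 2
# Flat on the SD over (0, 10): P(variance < 50) = P(sd < sqrt(50)) = sqrt(50) / 10.
flat_on_sd_exact = math.sqrt(50.0) / 10.0

print(flat_on_variance, flat_on_sd_exact, prob_variance_below())
```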

If we’re not allowed to be frequentist, and can only be Bayesian if we’re persuasive, what’s left? This:

However, BASP will require strong descriptive statistics, including effect sizes.

Wonderful! We can describe our data, and we can give effect sizes. But (a) we are not allowed to give measures of uncertainty around the effect sizes (OK, standard errors don’t look to be banned, but if confidence intervals say nothing, then surely standard errors, from which confidence intervals are often derived, must be equally suspect), and (b) these are purely descriptions of the data. If we are to assume they are something more, i.e. that we are measuring something that can be extrapolated, then we have to assume that the descriptive statistics are measures of an underlying parameter. But by the same logic as above, if a confidence interval says nothing about the range of likely values of a parameter, then a point estimate (i.e. a descriptive statistic) says nothing about the best value. In other words, we can say that the average IQ of the editors of psychology journals that we tested is 93, but the logic of the BASP editors implies that we can’t use this to say anything about editors of psychology journals in general.
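For the record, here is how thin the line between a standard error and a confidence interval is. This Python sketch computes the kind of “strong descriptive statistics” the editors ask for (a difference in means and Cohen’s d) alongside a standard error and an approximate 95% interval built from it. It uses a normal approximation (z = 1.96) and a Welch-style standard error; both are my assumptions, and with small samples a t quantile would be more appropriate. The function name and the example data are made up for illustration.

```python
import math
import statistics

def two_group_summary(group_a, group_b, z=1.96):
    """Hypothetical helper: difference in means, its Welch-style standard error,
    an approximate 95% interval built from that standard error, and Cohen's d."""
    na, nb = len(group_a), len(group_b)
    mean_a, mean_b = statistics.fmean(group_a), statistics.fmean(group_b)
    # Sample variances (denominator n - 1).
    var_a, var_b = statistics.variance(group_a), statistics.variance(group_b)
    diff = mean_a - mean_b                   # the effect size on the raw scale
    se = math.sqrt(var_a / na + var_b / nb)  # allowed, apparently...
    ci = (diff - z * se, diff + z * se)      # ...and this is just "SE times about 2"
    pooled_sd = math.sqrt(((na - 1) * var_a + (nb - 1) * var_b) / (na + nb - 2))
    cohens_d = diff / pooled_sd              # standardised effect size
    return {"diff": diff, "se": se, "ci": ci, "cohens_d": cohens_d}

print(two_group_summary([1, 2, 3, 4, 5], [3, 4, 5, 6, 7]))
```

With these toy groups the difference is -2 with a standard error of 1, so the interval is simply -2 ± 1.96: anyone handed the standard error can reconstruct it in their head.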

So what’s a social psychologist (whether basic or applied) to do? If it’s enough to just describe your data, you’re fine. But what if, say, you did an experiment? You then want to make inferences, but the BASP editors don’t like them. You do have a few choices, which all rely on varying degrees of bluffing:

1. Provide standard errors but not confidence intervals. Everyone will multiply by 2 to get their own confidence intervals anyway.

2. Be Bayesian with informative priors, and try to persuade the editors that this is OK.

3. Claim you’re using fiducial probability. The only person to understand this was R.A. Fisher, but that’s OK: you can simply cite one of his papers as a justification. The editors won’t understand the issues, so their decision will be just as arbitrary as it would be anyway.

If you try option 3, please tell me the results.

Even if we were all to convert to Bayesianism, we’re not all going to magically find informative priors where none existed before (if they allow uninformative priors, won’t we simply end up with the same estimate as under a frequentist framework?). And I’m not convinced this policy is going to allow researchers to develop these informative priors either.
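On that parenthetical question: in the simplest case the answer is essentially yes. The Python sketch below assumes normally distributed data with a known standard deviation and a normal prior on the mean (the standard conjugate set-up, chosen here purely for illustration). With a very vague prior, the posterior mean and standard deviation collapse onto the sample mean and the usual standard error, so the 95% credible interval matches the frequentist confidence interval; an informative prior pulls the estimate towards the prior mean and narrows the interval. The data (a mean of 93 from 20 hypothetical editors) and the prior settings are invented.

```python
import math

def normal_posterior(xbar, n, sigma, prior_mean, prior_sd):
    """Conjugate update for a normal mean with known sigma and a normal prior.
    Returns (posterior mean, posterior sd)."""
    prior_precision = 1.0 / prior_sd ** 2
    data_precision = n / sigma ** 2
    post_precision = prior_precision + data_precision
    post_mean = (prior_precision * prior_mean + data_precision * xbar) / post_precision
    return post_mean, 1.0 / math.sqrt(post_precision)

# Hypothetical data: sample mean 93 from n = 20, with sigma = 15 assumed known.
xbar, n, sigma = 93.0, 20, 15.0
freq_se = sigma / math.sqrt(n)

# Nearly "uninformative": an extremely wide prior.
vague = normal_posterior(xbar, n, sigma, prior_mean=100.0, prior_sd=1e6)
# Informative (hypothetical): prior knowledge that the mean is near 100, give or take 5.
informed = normal_posterior(xbar, n, sigma, prior_mean=100.0, prior_sd=5.0)

for label, (m, s) in [("vague prior", vague), ("informative prior", informed)]:
    print(f"{label}: posterior mean {m:.2f}, 95% interval {m - 1.96 * s:.2f} to {m + 1.96 * s:.2f}")
print(f"frequentist: estimate {xbar:.2f}, 95% CI {xbar - 1.96 * freq_se:.2f} to {xbar + 1.96 * freq_se:.2f}")
```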

Dunderheids.

I’m not sure the editors have even heard of informative priors.

Oh – and I couldn’t tell if Dave Harris was being facetious or not. But I’ve never observed a sampled individual corresponding exactly to a mean value either. So no point in reporting those. Medians, on the other hand, are the third finger.

Dave was either being facetious or sarcastic. Take your pick.

You didn’t read the editorial very carefully. Not all inferential stats are banned, just NHST. You can report standard errors. And if you’re not using CIs as NHST, and you can report standard errors, there is no reason to use CIs.

Ouch.

You didn’t read the blog post very carefully. In point 1 of my suggestions I specifically suggest using standard errors.

Just for cross-reference: Lauren Sandhu over at the Journal of Ecology blog wrote about “The Decision” as well, especially what it means for ecologists. Note Florian Hartig’s comment there; he makes a point that is very valid in ecology.

On the whole, I think the discussion of “The Decision” has more value than the decision itself: it might get some people thinking about statistics again.

To give you an idea: I’m a biologist with terrible formal statistical training. What I think I know I learned by myself. I think I’m really terrible at doing and interpreting stats myself, but I often interact with people who do high-impact stuff who actually think I’m better at stats than they are. This is mainly because I keep questioning their statistical assumptions, and I’ve tried out a *lot* of different stuff (to no avail with my data, sadly). People just don’t do this. They joke about p-values, but often don’t even check whether a method should only be applied if $assumption is met.

Sad, but true.

Thanks for the heads-up!

Most formal statistical training for ecologists is terrible, so you’re not alone. Questioning the assumptions is important, especially if you start looking for the answers (and not in Sokal & Rohlf!). I think all applied statisticians have horror stories they can tell.

Errrr, talking about assumptions: I wrote that it’s a post by Lauren Sandhu. In fact, the header in front of the text says it’s a guest post by Caroline Brophy.

My bad. Started reading below the line…